What if AI is not inevitable?

Free speech, bias, digital ethics, and the call for a good ol’ yellow-light slowdown

In grad school this week, a few classmates, our professor, and I had an “unlecture” - an open dialogue about what we’ve been discussing in class. It may seem weird for a peace studies program to include a class on the metaverse, but the more I’ve learned, the less surprising it is to me. One of the topics that came up was the desire for more inclusive and diverse spaces (online and IRL) and how - ironically - that desire often comes with a “without those other people or opinions” clause. If we want democratic participation, where truly everyone is welcome, and welcome to communicate their opinions without inhibition (as was emphasized in this article about social media use in Ghana elections), then we can’t police how people think and speak. We can disagree with them, or find them offensive…but to, for example, ban books because of their content, or hire “sensitivity readers” to rewrite books (even books whose authors are dead) in an attempt to make them more inclusive, is not only a special kind of fragility; it’s dangerous.

Reading Safiya Noble’s work, Algorithms of Oppression, left me with a lot of anger. Given the various examples of racism, sexism, and antisemitism that come through Google search results, are Google’s software engineers, and the lack of diversity at Google (and in the tech industry at large), to blame for bias being baked into the system? Or is it the fault of whoever is searching? Or is it nobody’s fault, and instead just a reflection of humans creating technology to be like humans? Is it any surprise that ChatGPT can be mean, when we can be mean, and it’s designed to respond like a human? Why do we expect AI to be free of bias when we know full well that we are not? Noble’s work points to the dangers and pitfalls of bias being a part of virtual systems. It’s not a call for bias to be eradicated (again - impossible), but her work holds big tech accountable and serves up the reminder that “representations are not just a matter of mirrors, reflections, key-holes. Somebody is making them, and somebody is looking at them” and not only that, but they leave the pages of books and the glow of screens and enter into the real world (Noble, 100). She illustrates this with the example of Dylann Roof, whose Google search for “black on white crime” pulled up a list of websites that “confirmed a patently false notion that Black violence on White Americans is an American crisis” and, according to his testimony, furthered his agenda to kill nine Black parishioners at a church in Charleston, SC (Noble, 111). What does it say about the efficacy of our search engines when people go looking for news about race relations and are met with false, hate-filled, destructive messaging? Or were there other search results, and Roof was operating from his own confirmation bias - just looking for what he wanted to see, for what would validate his fears?

As I write this, the Supreme Court’s Gonzalez v. Google case could potentially change the nature of free speech online. Section 230 protects platforms like Google, Facebook, YouTube, Twitter, etc. from being held legally responsible for what users post, and allows them to moderate content as they see fit. “But as large online platforms have become more central to the country’s political and economic affairs, policymakers have come to doubt whether that shield is still worth keeping intact, at least in its current form. Democrats say the law has given websites a free pass to overlook hate speech and misinformation; Republicans say it lets them suppress right-wing viewpoints.” To relate this back to book banning and posthumous editing - what can be done? Is it okay to rewrite someone else’s opinions? When it comes to censorship, where do we draw the line?

In another article, one that doesn’t necessarily negate Noble’s perspective but is in dialogue with it, Thilo Hagendorff and Sarah Fabi speak to “Why we need biased AI: How including cognitive biases can enhance AI systems.” In a nutshell, they argue that although bias has most commonly been seen as a flaw in AI (as demonstrated in Noble’s work), it plays an important role in simplifying decision making (Hagendorff & Fabi, 3). They use GPT-3 as an example, noting that it “has been trained on so many text tokens that when trying to produce them all, one would have to speak continuously for 5,000 years” (Hagendorff & Fabi, 4). In other words - some of the limits that humans have when making decisions, like time, computational resources, and possibilities for communication (“meaning that humans cannot directly transfer information from one brain to another”), are important to consider in AI development (Hagendorff & Fabi, 3-4). They propose kinds of cognitive machine biases “that may, similar to human biases, be interpreted as systematic misconceptions, insensitivity to probabilities, or even errors” but that ultimately will help “to make accurate decisions in uncertain situations” (Hagendorff & Fabi, 11). My question is - accurate according to whom? Also, what is the relationship between bias and intuition?

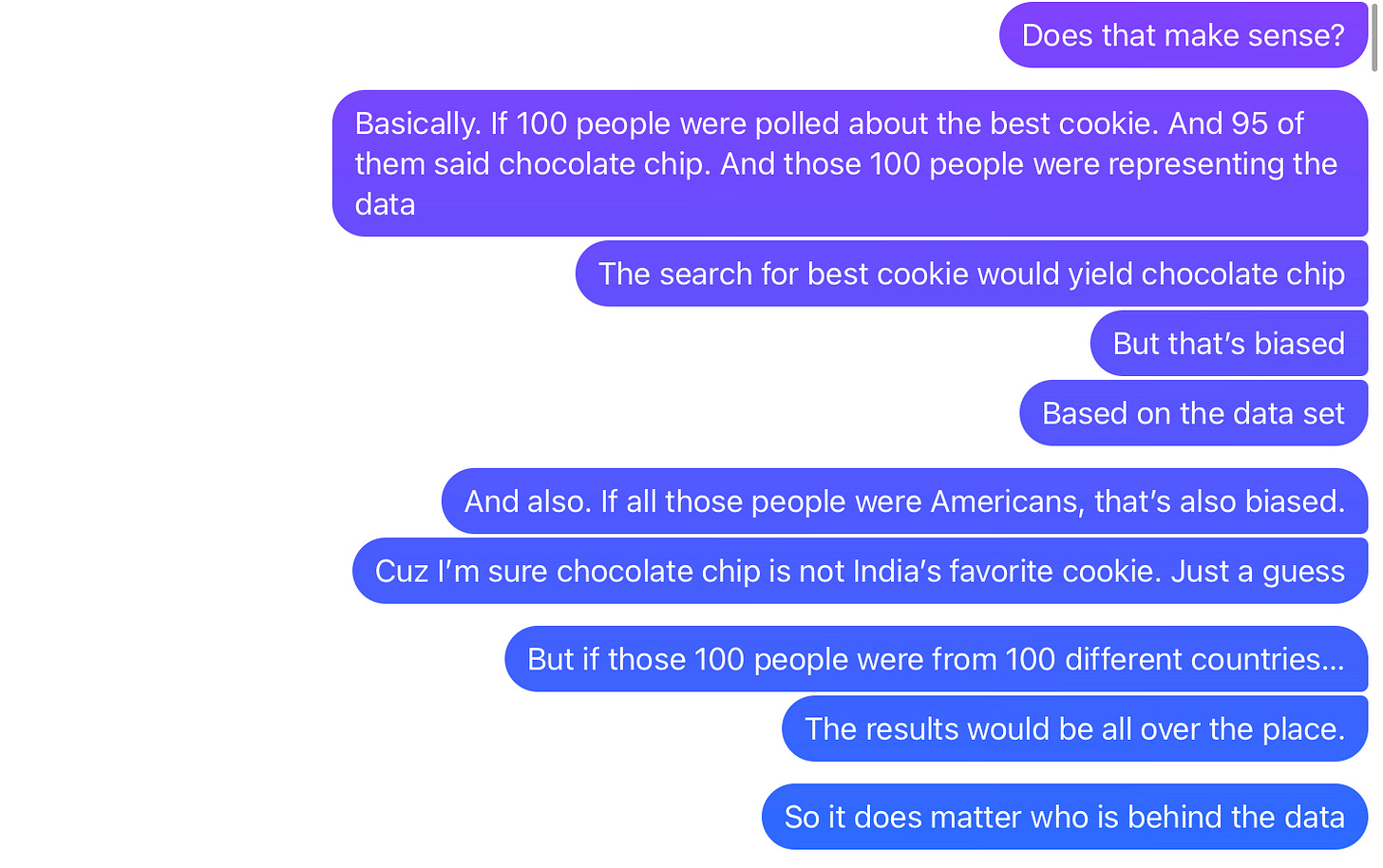

I was talking to my brother (a video game animator who has a lot of experience and interest in AI) about this and he gave a really good example. He said:

“Google is like a trash can. It’s a repository for garbage, but we get to decide what garbage ‘is’ and what we throw out. Everything about having to collect and take out garbage sucks but whatever, it needs to be done and the bin makes it easier. What I DON’T WANT is a solution for not having to do that, which includes a newer better robot that has intuitions about what trash is and what trash isn’t and makes those decisions for me. Because now I have no agency. I don’t know when or what or why it’s throwing stuff out. I can’t control it and it’s doing more harm than good. And now it’s tearing my art off the walls and destroying childhood memories and well, I gave it the power to decide what’s trash and that was supposed to be better for me…but here we are” (Balistrieri).

I’m not sure if this is the sort of bias that Hagendorff and Fabi are championing. But I agree with my brother - I would rather be the one deciding something like that. That said - are we deciding as much as we think we are, given the way ads and algorithms contort our whims and wishes? How might intentionally programming bias differ from the unconscious bias that is already being programmed without awareness? It’s worth noting that in another paper, Hagendorff says, “it is wrong to assume that the goal is ethical AI.” Instead, he says, we should be asking “where in an ethical society one should use AI and its inherent principle of predictive modeling and classification at all” (Hagendorff, 852). I bring this up because it’s clear that Hagendorff doesn’t support using AI universally. When and where is using AI ethically sound in the first place? It helps me get from point A to point B in my car. I like the song recommendations it gives me. I like our little robot, Ghost, who helps clean all the crumbs off the floor. But. Robots giving sermons? Or fully autonomous fighting robots? Not so much. I’m curious about the work Timnit Gebru is doing with her research institute, DAIR, and the idea of a slow AI movement. As Gebru says, “We have to figure out how to slow down, and at the same time, invest in people and communities who see an alternative future. Otherwise we’re going to be stuck in this cycle where the next thing has already been proliferated by the time we try to limit its harms.” If it’s a choice to use AI, how will we use it? And who has the privilege to make that choice?

Additional Resources

Balistrieri, Eric. “Message to Eva.” Facebook Messenger, 25 February 2023.

Hagendorff, Thilo. “Blind spots in AI ethics.” AI Ethics 2, 851–867 (2022). https://doi.org/10.1007/s43681-021-00122-8

Hagendorff, Thilo & Sarah Fabi. “Why we need biased AI: How including cognitive biases can enhance AI systems.” Journal of Experimental & Theoretical Artificial Intelligence, 2023. DOI: 10.1080/0952813X.2023.2178517

Noble, Safiya Umoja. Algorithms of Oppression: How Search Engines Reinforce Racism. New York University Press, 2018. ProQuest Ebook Central. http://ebookcentral.proquest.com/lib/umaine/detail.action?docID=4834260.